Rift — Immersive Rhythm Installation with LED Volume Visualization

Project Overview

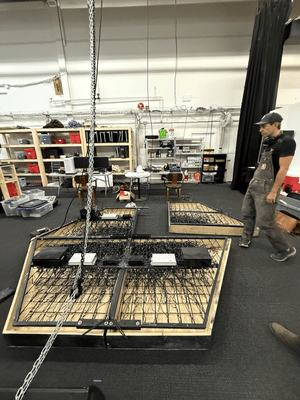

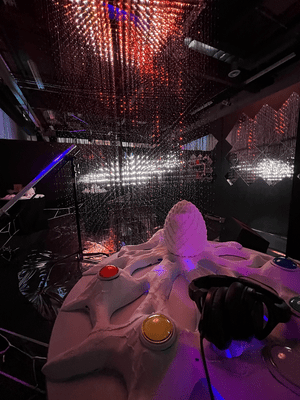

Rift is an immersive rhythm installation created for Signal 2025, in collaboration with Vancouver-based artist Tyler (tybot.ca). Using Tyler’s stunning Voxelite LED display as the central visual element, we designed and built four interactive rhythm-game tables, allowing players to perform rhythm actions on their stations while enjoying synchronized real-time visuals on the Voxelite.

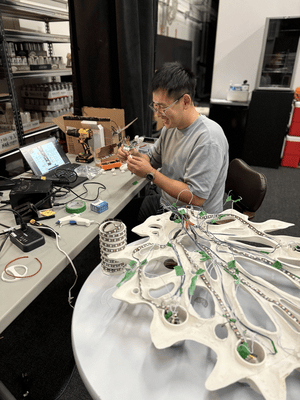

Each table features five illuminated buttons, each paired with an in-flow and out-flow LED strip to indicate note timing and hit feedback. Players must press the correct button at the right moment—similar to Guitar Hero, but reimagined as a tabletop experience.

Narratively, each table includes a symbolic “egg”: players hatch a mythical creature while battling an evil beast displayed on the main LED wall.

Successful hits generate projectiles from the player’s station, damaging the central beast. As players maintain accuracy, their egg gradually charges until it releases a final missile strike for massive damage.

Technical Details

The rhythm-game core logic was developed in Unreal Engine by our team member Brian.

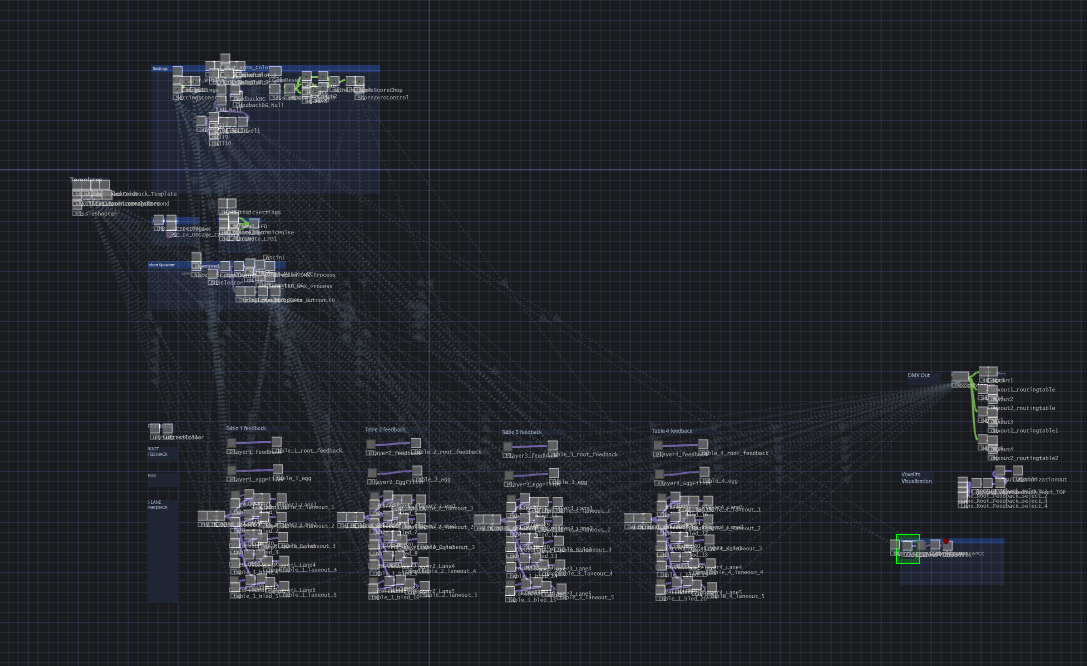

I was responsible for the electronic hardware, programming, and visual system design, including data flow and LED behaviors. All visual and control logic was implemented in TouchDesigner, communicating with Unreal Engine through OSC for button events and hit feedback.
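As a rough illustration of what a button event looks like on the wire, the sketch below encodes an OSC 1.0 message by hand using only the Python standard library. The address `/rift/button` and the argument layout (button index, pressed state) are hypothetical; the actual address scheme used between TouchDesigner and Unreal Engine is not documented here.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32, float32, and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


# Hypothetical event: button 2 pressed (1 = down).
packet = osc_message("/rift/button", 2, 1)
```

The resulting bytes can be sent as a single UDP datagram with `socket.sendto`. Keeping each event in one small datagram avoids any connection setup on the latency-critical path.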

Each table is driven by an ESP32-C3 controller board with onboard Ethernet, which is crucial for achieving the low latency required for rhythm-based gameplay. LED data is sent over sACN (E1.31, streaming DMX over Ethernet), and button-press events are sent as OSC messages.
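To show how LED pixels are typically addressed over sACN, here is a minimal sketch that maps a pixel index to a DMX universe and start channel. It assumes RGB pixels (3 channels each) packed 170 to a universe, which is a common convention but not confirmed for this installation; the function names are illustrative. The multicast address rule (239.255.hi.lo) comes from the E1.31 standard.

```python
CHANNELS_PER_UNIVERSE = 512  # size of one DMX universe
SACN_PORT = 5568             # standard sACN (E1.31) UDP port


def pixel_to_dmx(pixel_index: int, channels_per_pixel: int = 3,
                 first_universe: int = 1) -> tuple:
    """Return (universe, 1-based start channel) for a pixel.

    Pixels are never split across universes, so with RGB pixels each
    universe carries 170 pixels and its last 2 channels stay unused.
    """
    pixels_per_universe = CHANNELS_PER_UNIVERSE // channels_per_pixel
    universe = first_universe + pixel_index // pixels_per_universe
    channel = (pixel_index % pixels_per_universe) * channels_per_pixel + 1
    return universe, channel


def sacn_multicast_address(universe: int) -> str:
    """Multicast group for a universe: 239.255.<high byte>.<low byte>."""
    return f"239.255.{universe >> 8}.{universe & 0xFF}"


print(pixel_to_dmx(0))            # first pixel of the first universe
print(pixel_to_dmx(170))          # first pixel of the second universe
print(sacn_multicast_address(1))
```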

Because the ESP32-C3 has limited resources and the lighting data is dense, I also customized the LED driver, reducing the number of network channels from the default 8 to 2 and thereby increasing the buffer size available to each channel. One channel is dedicated to OSC communication and the other to DMX, which keeps real-time performance stable.
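The trade-off can be illustrated with a little arithmetic. The sketch below assumes a fixed buffer pool shared evenly between network channels; the 16 KB total is a hypothetical figure chosen for illustration, not a measured value from this hardware. The 638-byte figure is the size of a full 512-slot E1.31 data packet.

```python
SACN_FULL_PACKET = 638  # bytes: E1.31 data packet carrying all 512 DMX slots


def buffer_per_channel(total_bytes: int, channels: int) -> int:
    """Split a fixed buffer pool evenly between network channels."""
    return total_bytes // channels


TOTAL_BUFFER = 16 * 1024  # hypothetical pool size, for illustration only

for channels in (8, 2):
    per_channel = buffer_per_channel(TOTAL_BUFFER, channels)
    packets = per_channel // SACN_FULL_PACKET
    print(f"{channels} channels: {per_channel} bytes each, "
          f"room for {packets} full sACN packets")
```

Under these assumed numbers, moving from 8 channels to 2 quadruples the room each channel has for queued packets, which matters when several universes arrive back to back.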

Additional Visual System Details

For the Voxelite visuals, I used a hybrid approach that combines pre-rendered content with real-time rendering inside TouchDesigner.

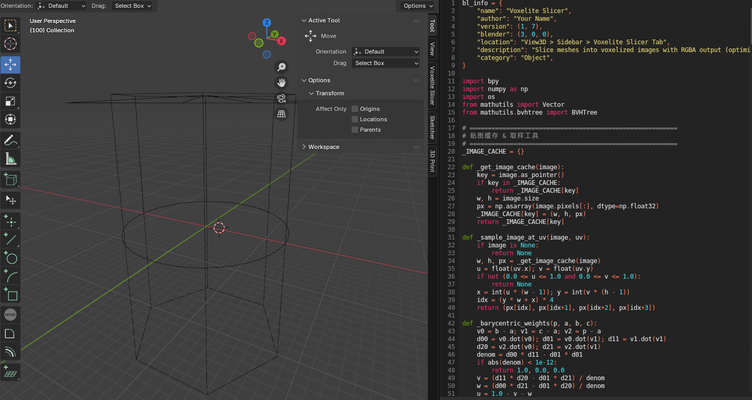

For the pre-rendered visuals, I developed a custom Blender plugin that slices and samples voxel data directly from 3D scenes, outputting pixel values that match the Voxelite’s 26 × 26 × 40 resolution. This allowed me to bake complex elements—such as skeletal animations, creature movements, and dense particle effects—into optimized volumetric frames.
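The slicing idea can be sketched in plain Python, independent of Blender. The example samples a scene at the center of every voxel and returns one 2D slice per layer. The `sample` callback stands in for whatever the plugin queries in the 3D scene, and the axis order (26, 26, 40) as (x, y, z) is an assumption; neither reflects the actual plugin code.

```python
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]

GRID = (26, 26, 40)  # (nx, ny, nz); axis order is an assumption


def bake_frame(sample: Callable[[Vec3], RGB], bounds_min: Vec3,
               bounds_max: Vec3, grid=GRID) -> List[List[List[RGB]]]:
    """Sample the scene at every voxel center; result is frame[iz][iy][ix]."""
    nx, ny, nz = grid
    step = [(hi - lo) / n for lo, hi, n in zip(bounds_min, bounds_max, grid)]
    frame = []
    for iz in range(nz):
        z = bounds_min[2] + (iz + 0.5) * step[2]
        rows = []
        for iy in range(ny):
            y = bounds_min[1] + (iy + 0.5) * step[1]
            rows.append([
                sample((bounds_min[0] + (ix + 0.5) * step[0], y, z))
                for ix in range(nx)
            ])
        frame.append(rows)
    return frame


def glowing_sphere(p: Vec3) -> RGB:
    """Hypothetical test scene: an orange sphere of radius 0.5 at the origin."""
    x, y, z = p
    return (255, 128, 0) if x * x + y * y + z * z <= 0.25 else (0, 0, 0)


frame = bake_frame(glowing_sphere, (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
```

Running this once per animation frame and storing the slices gives a volumetric sequence that playback only has to stream, with no geometry evaluated at show time.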

For the real-time components, including projectiles, missile effects, and various interactive transitions, I built a mapping system in TouchDesigner that accurately translates 3D coordinates into the Voxelite’s voxel grid. Since real-time volumetric rendering can be computationally intensive, the hybrid workflow ensures that only interactive elements are computed live, while heavy visuals are handled by the pre-render pipeline.
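A mapping of this kind can be sketched as follows, assuming the display volume corresponds to an axis-aligned bounding box in world space. The function names, the half-open bounds test, and the x-fastest flat index order are illustrative choices, not the actual TouchDesigner network.

```python
from typing import Optional, Tuple

GRID = (26, 26, 40)  # (nx, ny, nz); axis order is an assumption


def world_to_voxel(pos, bounds_min, bounds_max,
                   grid=GRID) -> Optional[Tuple[int, int, int]]:
    """Map a 3D point to voxel indices, or None if it lies outside the volume."""
    index = []
    for p, lo, hi, n in zip(pos, bounds_min, bounds_max, grid):
        t = (p - lo) / (hi - lo)  # normalized 0..1 position along this axis
        if not 0.0 <= t < 1.0:
            return None  # e.g. a projectile that has left the display
        index.append(min(int(t * n), n - 1))
    return tuple(index)


def flat_index(voxel, grid=GRID) -> int:
    """Flatten (ix, iy, iz) into a single buffer offset, x varying fastest."""
    ix, iy, iz = voxel
    nx, ny, _ = grid
    return ix + nx * (iy + ny * iz)


lo, hi = (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)
center = world_to_voxel((0.0, 0.0, 0.0), lo, hi)
print(center, flat_index(center))
```

Returning `None` for out-of-range points lets effects such as projectiles travel in continuous world space and only light voxels while they are inside the volume.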